Benchmarking models

Run on-device benchmarks of your own models.

Embedl Hub lets you run benchmarks of your models on real devices in the cloud, such as smartphones and tablets. In this guide, you will learn how to create benchmarks, and how to view and interpret the results.

How it works

When you benchmark a model using Embedl Hub, the following happens:

- The model is uploaded to the cloud

- A target device is provisioned (the job is queued if all devices are busy)

- The model is transferred to the target device

- The model is profiled with the target runtime, which measures performance metrics such as latency and memory usage

- The results are made available on the run details page

Creating a benchmark run

These instructions assume you have already installed and configured the Embedl Hub Python library. Refer to the setup guide for instructions.

Create a new benchmark run by using the embedl-hub benchmark command. Provide a file path to the model and the name of the target device:

```shell
embedl-hub benchmark \
  --model model.tflite \
  --device "Samsung Galaxy S24"
```

A link to the run details page is provided in the console output. Open it in your web browser to follow the progress of the run. The output looks similar to the following, shown here for a run that profiles mobilenetv2.tflite on a Samsung Galaxy S25:

```text
[00:00:00] Profiling mobilenetv2.tflite on Samsung Galaxy S25
[00:00:00] Running command with project name: Image classification
Running command with experiment name: MobileNetV2
Track your progress here.
```

Supported runtimes and model formats

The Embedl Hub device cloud supports benchmarking .tflite models using LiteRT. You can convert an ONNX model to the .tflite format by using the CLI:

```shell
embedl-hub compile --model model.onnx
```

Support for additional runtimes and formats is coming soon. If you need another runtime or model format, refer to the documentation on remote hardware clouds for instructions on how to benchmark it using a third-party provider.
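If you script your benchmark runs, a small helper can choose the right CLI command from the model's file extension. The Python sketch below only wraps the embedl-hub benchmark and embedl-hub compile invocations shown above, with their --model and --device flags; the helper name plan_commands and the device list are hypothetical. It assumes you benchmark the .tflite file produced by embedl-hub compile in a separate, second step, since where compile writes its output is not covered here.

```python
import shlex
from pathlib import Path


def plan_commands(model_path: str, devices: list[str]) -> list[list[str]]:
    """Build embedl-hub CLI invocations for a model file (hypothetical helper).

    - .tflite: one `embedl-hub benchmark` run per target device (LiteRT).
    - .onnx: convert with `embedl-hub compile` first, then benchmark the
      resulting .tflite file in a second call. `devices` is unused here.
    """
    suffix = Path(model_path).suffix.lower()
    if suffix == ".tflite":
        return [
            ["embedl-hub", "benchmark", "--model", model_path, "--device", device]
            for device in devices
        ]
    if suffix == ".onnx":
        return [["embedl-hub", "compile", "--model", model_path]]
    raise ValueError(f"Unsupported model format: {suffix or '(no extension)'}")


# Hypothetical usage: print one benchmark command per device, shell-quoted
for cmd in plan_commands("model.tflite", ["Samsung Galaxy S24", "Samsung Galaxy S25"]):
    print(shlex.join(cmd))
```

To actually start the runs, you could pass each command list to subprocess.run(cmd, check=True). Passing a list rather than a single string avoids shell quoting problems with device names that contain spaces.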

Supported target devices

See the list of supported devices in Embedl Hub by visiting the supported devices page or by using the CLI:

```shell
embedl-hub list-devices
```

Viewing benchmark results

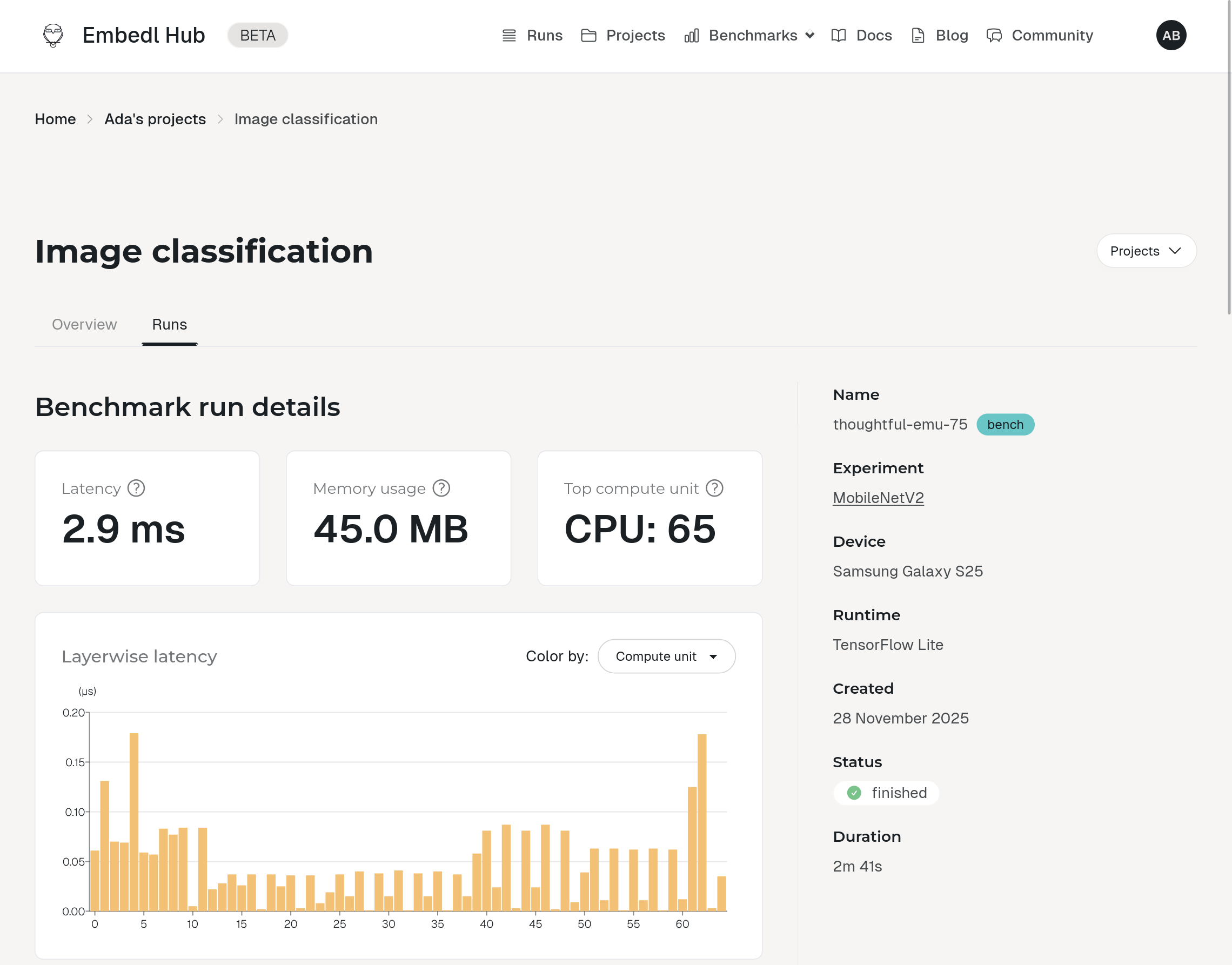

View the results of your benchmark runs on the run details page for each run. Find a link to the run details page in the CLI output after creating a benchmark run.

The run details page displays various performance metrics including:

- Latency: The average time taken to perform a single inference on the target device, measured in milliseconds (ms).

- Memory usage: The peak memory consumption during inference, measured in megabytes (MB).

- Top compute unit: The compute unit (e.g., CPU, GPU, NPU) that executes the most layers during inference, along with the number of layers executed on it.
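To make the first two definitions concrete, the sketch below computes them from raw measurements. It assumes you have per-inference latencies in milliseconds and memory samples in megabytes; the function name summarize and the sample values are hypothetical, and the sketch does not show how Embedl Hub collects these measurements on the device.

```python
from statistics import mean


def summarize(latencies_ms: list[float], memory_samples_mb: list[float]) -> dict[str, float]:
    """Hypothetical summary that mirrors the metric definitions above."""
    return {
        # Latency: average time for a single inference, in ms
        "latency_ms": mean(latencies_ms),
        # Memory usage: peak consumption observed during inference, in MB
        "peak_memory_mb": max(memory_samples_mb),
    }


# Hypothetical measurements from five inferences
result = summarize([4.0, 4.5, 5.0, 4.5, 4.0], [31.5, 33.0, 32.25])
print(result)
```

Averaging over several inferences smooths out run-to-run variation, while memory is reported as a peak because the highest point is what has to fit on the device.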

The run details page also provides a more detailed breakdown of your model's performance, described in the following sections.

Layerwise latency

The layerwise latency plot provides a breakdown of latency by individual layers in the model, sorted in topological order. This shows the distribution of latency across the model structure.

Hover over individual bars to see the layer name and latency. Click on a bar to highlight the corresponding layer in the model summary table below.
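If you want to work with the same kind of data outside the browser, for example to list the slowest layers, the sketch below ranks layers by latency while keeping each layer's position in topological order. The layer names and values are hypothetical, and the sketch does not assume any particular export format from Embedl Hub.

```python
def slowest_layers(
    layer_latencies: list[tuple[str, float]], k: int = 3
) -> list[tuple[int, str, float, float]]:
    """Return the k slowest layers as (position, name, latency_ms, share_of_total).

    `layer_latencies` is assumed to be in topological order, like the bars in
    the plot, so `position` is the layer's index in that order.
    """
    total = sum(ms for _, ms in layer_latencies)
    indexed = [(i, name, ms) for i, (name, ms) in enumerate(layer_latencies)]
    top = sorted(indexed, key=lambda item: item[2], reverse=True)[:k]
    return [(i, name, ms, ms / total) for i, name, ms in top]


# Hypothetical per-layer latencies (ms) in topological order
layers = [("conv_1", 0.5), ("depthwise_2", 0.75), ("conv_3", 2.0), ("pool_4", 0.25), ("fc_5", 0.5)]
result = slowest_layers(layers, k=2)
for position, name, ms, share in result:
    print(f"#{position} {name}: {ms:.2f} ms ({share:.0%} of total)")
```

Keeping the topological position alongside the latency makes it easy to find the same layers again in the plot.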

Operation type breakdown

The operation type pie chart breaks down the model by operation type and has two modes:

- Latency: Total latency contributed by each type.

- Layer count: The number of layers of each type.
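The two modes are two aggregations of the same per-layer records. The sketch below computes both, assuming hypothetical (layer_name, op_type, latency_ms) records; the function name and data are illustrative only, although the op type names follow LiteRT builtin operator naming.

```python
from collections import Counter, defaultdict


def breakdown_by_op_type(
    layers: list[tuple[str, str, float]],
) -> tuple[dict[str, float], Counter]:
    """Aggregate (layer_name, op_type, latency_ms) records by operation type.

    Returns the data behind both chart modes: total latency per type and
    the number of layers per type.
    """
    latency_by_type: dict[str, float] = defaultdict(float)
    count_by_type: Counter = Counter()
    for _name, op_type, latency_ms in layers:
        latency_by_type[op_type] += latency_ms
        count_by_type[op_type] += 1
    return dict(latency_by_type), count_by_type


# Hypothetical per-layer records
layers = [
    ("conv_1", "CONV_2D", 0.5),
    ("dw_2", "DEPTHWISE_CONV_2D", 0.75),
    ("conv_3", "CONV_2D", 2.0),
    ("pool_4", "AVERAGE_POOL_2D", 0.25),
    ("fc_5", "FULLY_CONNECTED", 0.5),
]
latency, counts = breakdown_by_op_type(layers)
print(latency)
print(counts)
```

Comparing the two results shows whether an operation type is expensive because it occurs often or because each instance is slow.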

Compute units

A breakdown of the number of layers executed on each compute unit (e.g., CPU, GPU, NPU). This helps you understand how effectively the model uses the available hardware.
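As a rough illustration, the sketch below counts layers per compute unit, picks the top compute unit, and reports the share of layers that run somewhere other than the CPU. The unit labels ("CPU", "NPU") and the layer placement are hypothetical. Note that a layer count is only a proxy: it does not weigh layers by their latency.

```python
from collections import Counter


def compute_unit_summary(layer_units: list[str]) -> tuple[str, int, float]:
    """Summarize which compute unit each layer ran on.

    `layer_units` must be non-empty; "CPU" is assumed to be the CPU's label.
    Returns (top_unit, layers_on_top_unit, fraction_off_cpu).
    """
    counts = Counter(layer_units)
    top_unit, top_count = counts.most_common(1)[0]
    off_cpu = sum(n for unit, n in counts.items() if unit != "CPU")
    return top_unit, top_count, off_cpu / len(layer_units)


# Hypothetical placement for an 8-layer model
units = ["NPU"] * 6 + ["CPU"] * 2
print(compute_unit_summary(units))
```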